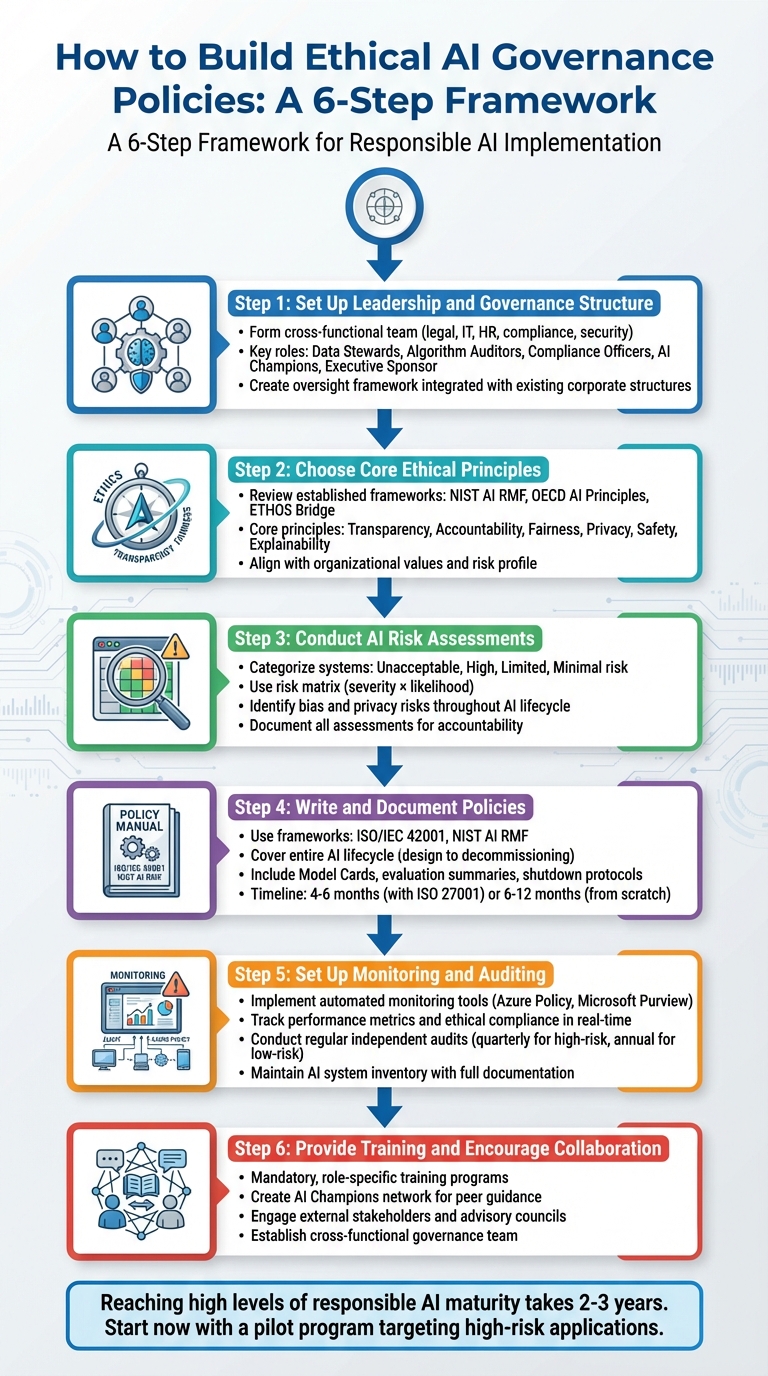

AI is transforming industries, but without clear oversight, it can lead to issues like bias, privacy breaches, and legal risks. Companies that prioritize ethical AI governance not only comply with regulations but also build trust and reduce risks. Here's a simple roadmap to get started:

- Leadership Framework: Form a cross-functional team (legal, IT, HR, compliance, etc.) to oversee AI policies and assign clear roles like Data Stewards and Algorithm Auditors.

- Ethical Principles: Use established frameworks like NIST AI RMF or OECD AI Principles to guide decision-making.

- Risk Assessments: Evaluate AI systems for risks (bias, privacy issues) and categorize them as Unacceptable, High, Limited, or Minimal.

- Policy Development: Draft detailed policies for every AI lifecycle phase using standards like ISO/IEC 42001.

- Monitoring & Auditing: Implement automated tools to track AI performance and conduct regular audits for compliance.

- Training & Collaboration: Provide ongoing training tailored to roles and engage external experts for diverse perspectives.

6-Step Framework for Building Ethical AI Governance Policies



Step 1: Set Up Leadership and Governance Structure

Creating ethical AI governance begins by bringing together the right team. This means forming a cross-functional group that includes representatives from legal, IT, HR, compliance, security, product development, and senior management. The goal is to build a structure where different perspectives come together to spot risks and opportunities that might otherwise slip through the cracks. This collaboration lays the groundwork for crafting policies that are both comprehensive and practical.

Define Roles and Responsibilities

Accountability is key to effective oversight. Assigning specific roles ensures everyone knows their part in maintaining ethical AI practices:

- Data Stewards focus on maintaining data quality and safeguarding privacy.

- Algorithm Auditors evaluate AI models for performance, bias, and ethical alignment.

- Compliance Officers track regulations like the EU AI Act to ensure legal standards are met.

- AI Champions, embedded within business units, guide their teams on ethical considerations specific to their domains.

- An Executive Sponsor provides resources and has the authority to enforce decisions.

"AI governance is simply a framework of rules, practices, and policies that direct how your organization manages AI technologies." – Fisher Phillips

It’s essential to document who has the authority to shut down an AI system if it malfunctions or behaves unethically. Successful organizations often balance centralized standards with decentralized implementation. For instance, an AI Center of Excellence can set overarching policies while allowing individual teams to adapt these guidelines to their specific workflows. This clear division of responsibilities ensures every department plays a role in maintaining ethical oversight.

Create an Oversight Framework

Once roles are established, the next step is integrating them into a structured oversight framework. Instead of building separate systems, embed AI governance into your existing corporate structures. For example, connect the AI committee with existing risk and audit committees to streamline escalation processes and reduce redundant oversight. While the board of directors tackles high-level reputational risks, audit committees can focus on how AI affects internal controls, such as controls over financial reporting.

Incorporate checkpoints for AI governance at critical stages like design reviews, testing, and prelaunch approvals. Use automated tools to detect biased data or privacy issues early on, and establish feedback loops so users and operators can report concerns. This integrated approach brings together people, processes, and technology to create a strong foundation for ethical AI governance.
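To make the checkpoints concrete, here is a minimal sketch of a launch gate, assuming each project records who signed off at each stage and which concerns from the feedback loop are still open. The names `AIProject`, `REQUIRED_GATES`, and `launch_blockers` are hypothetical, and the gate list simply reuses the three stages mentioned above.

```python
from dataclasses import dataclass, field

# Hypothetical checkpoint names taken from the stages listed above;
# adapt the tuple to your own development lifecycle.
REQUIRED_GATES = ("design_review", "testing", "prelaunch_approval")


@dataclass
class AIProject:
    name: str
    approvals: dict[str, str] = field(default_factory=dict)  # gate -> approver
    open_concerns: list[str] = field(default_factory=list)   # from feedback channels


def launch_blockers(project: AIProject) -> list[str]:
    """Return everything still preventing launch; an empty list means clear."""
    blockers = [f"missing sign-off: {gate}"
                for gate in REQUIRED_GATES if gate not in project.approvals]
    blockers += [f"unresolved concern: {c}" for c in project.open_concerns]
    return blockers


if __name__ == "__main__":
    chatbot = AIProject("support-chatbot", approvals={"design_review": "J. Doe"})
    print(launch_blockers(chatbot))
```

Treating unresolved user reports as blockers, not just missing sign-offs, is one way to connect the feedback loop to the approval process; some teams may prefer to let a named approver waive a concern instead.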

Step 2: Choose Core Ethical Principles

Once your governance structure is set, the next step is to identify the ethical principles that will guide your organization in managing AI risks and maintaining compliance. These principles act as a framework for how you develop, deploy, and monitor AI technologies. Instead of starting from scratch, you can leverage established frameworks that have already been vetted by experts, government bodies, and global organizations.

Review Established Frameworks

For U.S.-based companies, the NIST AI Risk Management Framework (AI RMF) is an excellent starting point. This framework was developed over 18 months with input from over 240 organizations spanning industry, academia, and government. It highlights seven key characteristics of trustworthy AI: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair.

The framework also emphasizes that AI systems are both "technical and social" in nature, meaning risks arise not only from technical flaws but also from societal factors and human behavior.

"AI risk management is a key component of responsible development and use of AI systems. Responsible AI practices can help align the decisions about AI system design, development, and uses with intended aim and values." – NIST AI RMF 1.0

For organizations operating on a global scale, the OECD AI Principles and UNESCO Ethics guidelines provide a broader perspective aligned with international standards. Another useful tool is the ETHOS Bridge Framework, which translates NIST's principles into actionable steps through nine pillars, including societal well-being and stakeholder engagement. These frameworks are especially helpful for turning high-level ethical goals into practical measures. By analyzing these frameworks, you can identify the principles most relevant to your organization's needs and risks.

Compare Key Principles Across Frameworks

Certain ethical principles appear consistently across different frameworks, making it easier to align them with your organization's values and risk management strategies. Here's a comparison of some key frameworks:

| Framework | Key Principles | Primary Focus |
|---|---|---|
| NIST AI RMF | Valid & Reliable, Safe, Secure & Resilient, Accountable & Transparent, Explainable & Interpretable, Privacy-Enhanced, Fair | Technical and socio-technical trustworthiness |
| OECD AI Principles | Inclusive Growth & Well-being, Human Rights & Fairness (including privacy), Transparency & Explainability, Robustness, Security & Safety, Accountability | Global standards and economic policy |
| ETHOS Bridge | Accountability, Fairness, Transparency, Privacy, Reliability, Human Oversight, Societal Well-being, Compliance, Stakeholder Engagement | Applying ethics practically in organizations |

Step 3: Conduct AI Risk Assessments

Once your ethical principles are in place, the next step is to evaluate the risks your AI systems might pose. This isn't a one-and-done task - it starts before deployment and continues throughout the system's entire lifecycle. The goal is to identify potential issues like data quality problems, algorithmic bias, privacy concerns, and broader societal effects.

Methods for Risk Assessment

To assess AI risks effectively, categorize systems into four risk levels, mirroring the EU AI Act: Unacceptable, High, Limited, and Minimal. Systems with unacceptable risks, such as social scoring or untargeted scraping of facial images, should be banned outright. High-risk systems, like those used in hiring, healthcare, or critical infrastructure, demand rigorous oversight and mitigation strategies before they can be deployed.
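A first-pass triage can be expressed as a lookup from use cases to tiers, as in the minimal sketch below. The mapping is illustrative only: the prohibited and high-risk entries come from the examples in this step, while the chatbot (Limited) and spam filter (Minimal) entries are assumed common examples. A real classification needs legal review against the EU AI Act itself. All names here are hypothetical.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Illustrative mapping only; not a legal classification.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "untargeted_facial_scraping": RiskTier.UNACCEPTABLE,
    "hiring": RiskTier.HIGH,
    "healthcare": RiskTier.HIGH,
    "critical_infrastructure": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

NEXT_ACTION = {
    RiskTier.UNACCEPTABLE: "prohibit: do not build or deploy",
    RiskTier.HIGH: "mitigate, document, and require sign-off before deployment",
    RiskTier.LIMITED: "meet transparency obligations (e.g. disclose AI use)",
    RiskTier.MINIMAL: "apply standard controls",
}


def classify(use_cases):
    """Return the strictest tier among a system's use cases.

    Unknown use cases, and systems with no declared use case, default to HIGH
    so they are reviewed rather than waved through.
    """
    return max((USE_CASE_TIERS.get(u, RiskTier.HIGH) for u in use_cases),
               default=RiskTier.HIGH)


if __name__ == "__main__":
    tier = classify(["customer_chatbot", "hiring"])
    print(tier.name, "->", NEXT_ACTION[tier])
```

Taking the maximum tier reflects the idea that a system inherits the obligations of its riskiest use.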

"To conduct a risk assessment, an organization's AI governance team should first identify and rank the risks as unacceptable (prohibited), high, limited, or minimal, evaluate the probability of harm, implement mitigation measures to reduce or eliminate risks, and document the risk assessment to demonstrate accountability." – Arsen Kourinian, Mayer Brown

A practical tool for this process is a risk matrix, which maps the severity of harm against the likelihood of occurrence. A standard 3×3 matrix, for instance, typically rates each dimension from 1 to 3 and multiplies the two ratings, producing scores from 1 (low) to 9 (critical). Systems scoring 6 or higher often require human oversight for decision-making. Combine these quantitative tools with qualitative approaches, such as Data Protection Impact Assessments (DPIAs) for personal data and Ethical Data Impact Assessments (EDIAs) for broader societal impacts.
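The matrix arithmetic is simple enough to automate. This sketch assumes the common convention of multiplying a 1 to 3 severity rating by a 1 to 3 likelihood rating; the `low`/`medium`/`high` labels and function names are hypothetical, and the oversight threshold of 6 is the one given above.

```python
# Ordinal ratings for a 3x3 matrix (assumed labels).
RATING = {"low": 1, "medium": 2, "high": 3}

# From the text: scores of 6 or higher often call for human oversight.
OVERSIGHT_THRESHOLD = 6


def risk_score(severity: str, likelihood: str) -> int:
    """Severity x likelihood, each rated 1-3, giving a score from 1 to 9."""
    return RATING[severity] * RATING[likelihood]


def requires_human_oversight(severity: str, likelihood: str) -> bool:
    return risk_score(severity, likelihood) >= OVERSIGHT_THRESHOLD


if __name__ == "__main__":
    # Print the full matrix: rows are severity, columns are likelihood.
    for sev in RATING:
        print(f"{sev:>6}", [risk_score(sev, lik) for lik in RATING])
```

Under this convention only three cells reach the threshold: high severity with medium likelihood, medium severity with high likelihood, and high/high.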

To deepen the analysis, bring together a team of experts from legal, technical, and ethical backgrounds. This cross-disciplinary approach can help identify potential harms, evaluate intended uses versus potential misuse, and consider the effects on both direct stakeholders (users, developers, decision subjects) and indirect ones (bystanders, regulators, society at large).

Identify Bias and Privacy Risks

After the initial risk assessment, zoom in on two critical areas: bias and privacy. Bias can creep into AI systems at several stages: from pre-existing bias in training data, to technical bias in how algorithms are designed, to new biases that emerge during deployment. To address this, ask AI vendors for proof that their systems were trained on diverse datasets. Conduct regular audits and establish channels for users to flag biased outcomes.
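One way to run the regular bias audits described above is to compare selection rates across groups. The sketch below computes a disparate impact ratio and flags results under 0.8, the "four-fifths" heuristic from the US EEOC's Uniform Guidelines on Employee Selection Procedures. Treat it as a screening signal, not a legal determination; group labels and function names are hypothetical.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # common screening heuristic for adverse impact


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs -> {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(bool(was_selected))
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes to audit")
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


def flag_adverse_impact(outcomes, threshold=FOUR_FIFTHS):
    return disparate_impact_ratio(outcomes) < threshold


if __name__ == "__main__":
    audit = ([("group_a", True)] * 50 + [("group_a", False)] * 50
             + [("group_b", True)] * 30 + [("group_b", False)] * 70)
    # Selection rates are 0.50 and 0.30, so the ratio is about 0.6.
    print(disparate_impact_ratio(audit), flag_adverse_impact(audit))
```

A single ratio will not catch every form of bias; it is most useful as a recurring check whose failures trigger a deeper review.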

Privacy risks are another major concern, especially with generative AI systems that might expose private information or produce sensitive outputs. Document the entire data lifecycle to uncover potential vulnerabilities. Automated tools can help detect privacy violations and monitor re-identification risks in anonymized datasets. These measures align with the ongoing oversight strategies discussed earlier.
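Re-identification risk in "anonymized" data is often measured with k-anonymity: the smallest number of records that share the same combination of quasi-identifiers such as a truncated ZIP code or an age band. The sketch below assumes records are dictionaries; the column names and the default `k=5` are hypothetical choices, not a recommended standard.

```python
from collections import Counter


def _groups(records, quasi_identifiers):
    """Count records per combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers):
    """Smallest group size; k == 1 means someone is unique on those columns."""
    if not records:
        raise ValueError("no records to check")
    return min(_groups(records, quasi_identifiers).values())


def risky_groups(records, quasi_identifiers, k=5):
    """Quasi-identifier combinations shared by fewer than k records."""
    return [combo for combo, n in _groups(records, quasi_identifiers).items() if n < k]


if __name__ == "__main__":
    records = [
        {"zip": "021**", "age_band": "30-39", "diagnosis": "flu"},
        {"zip": "021**", "age_band": "30-39", "diagnosis": "asthma"},
        {"zip": "021**", "age_band": "40-49", "diagnosis": "flu"},
    ]
    print(k_anonymity(records, ["zip", "age_band"]))
    print(risky_groups(records, ["zip", "age_band"], k=2))
```

Here the lone 40-49 record gives k = 1, so that person could be singled out. k-anonymity does not protect against every attack (for example, when everyone in a group shares the same sensitive value), so it complements rather than replaces a DPIA.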

For organizations looking to build a more thorough AI risk assessment framework, expert advice and consulting services are available through the Top Consulting Firms Directory.

Step 4: Write and Document Policies and Procedures

Once you've completed your risk assessment, it's time to turn those insights and ethical principles into concrete policies. These policies form the backbone of your AI governance program, clearly outlining how your organization will manage AI systems throughout their lifecycle - from creation to decommissioning. As Fisher Phillips explains: "Governance is about building a process, following the process, and documenting the process". Having clear, well-documented procedures not only demonstrates accountability but also reassures regulators and auditors.

Use Policy Development Frameworks

Creating policies from scratch can be overwhelming, but you don't have to reinvent the wheel. Established frameworks like ISO/IEC 42001 and the NIST AI Risk Management Framework offer structured approaches to AI governance. ISO/IEC 42001 provides a certifiable standard tailored for AI management systems, while the NIST framework offers a voluntary, detailed guide to identifying and mitigating AI risks.

If your organization is already certified under ISO 27001, you're in luck. You can reuse about 50-60% of your existing documentation and processes when transitioning to ISO 42001. Both standards share a "Harmonized Structure" (Clauses 4-10), which simplifies integration. However, there's a key difference: "The biggest conceptual shift from ISO 27001 to ISO 42001 is moving from protecting information to governing autonomous decision-making systems," notes Saravanan G, Vice President of Cyber Assurance at Glocert International.

How long does it take? It depends on your starting point. Organizations with a strong ISO 27001 foundation can achieve ISO 42001 certification in 4-6 months, while those starting fresh may need 6-12 months. The process typically begins with a 30-day initial assessment and can take up to a year for full integration. These frameworks provide a structured path for drafting the detailed guidelines described below.

Include Specific Guidelines

Your policies should cover every phase of the AI lifecycle, with actionable and specific requirements. Standardized templates - like Model Cards and evaluation summaries - help ensure consistency across projects. For each AI initiative, document the business purpose, data sources, ethical and legal considerations, and operational safeguards, such as shutdown protocols and release approvals.
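A per-initiative record can make these documentation requirements checkable. The sketch below uses a hypothetical, simplified schema built from the items listed above (business purpose, data sources, ethical and legal considerations, shutdown protocol, release approval); it is not the published Model Cards format, which covers more sections.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AISystemRecord:
    """Minimal per-initiative documentation record (hypothetical schema)."""
    name: str
    business_purpose: str = ""
    data_sources: list[str] = field(default_factory=list)
    ethical_legal_considerations: str = ""
    shutdown_protocol: str = ""
    release_approver: str = ""

    def missing_fields(self) -> list[str]:
        """Names of fields that are still empty and should block sign-off."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


if __name__ == "__main__":
    record = AISystemRecord(
        "resume-screener",
        business_purpose="Shortlist applicants for recruiter review",
        data_sources=["ATS exports, 2019-2023"],
    )
    print(record.missing_fields())
```

Keeping the record as structured data, rather than free-form documents, lets the same template be validated automatically across every project.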

High-risk applications require even more detailed guidelines. Specify when human oversight is mandatory and how interventions should be recorded. This is essential because over 75% of businesses flagged during regulatory checks lacked proper documentation for AI decisions, exposing them to potential fines. Comprehensive documentation not only ensures compliance but also builds trust with stakeholders. With these policies in place, you're ready to move on to establishing strong monitoring and auditing systems.
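Recording interventions consistently is easier when every event has the same shape. This minimal sketch writes each human-oversight event as one JSON line; the field names and the set of allowed actions are hypothetical. Note that opening a file in append mode does not by itself make a log tamper-evident; regulated settings may need write-once storage or signed entries.

```python
import json
from datetime import datetime, timezone

# Hypothetical vocabulary of oversight actions.
ALLOWED_ACTIONS = {"approved", "overridden", "escalated", "halted"}


def intervention_entry(system, decision_id, reviewer, action, reason):
    """Serialize one human-oversight event as a single JSON line."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    if not reason:
        raise ValueError("a reason is required for every intervention")
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system": system,
        "decision_id": decision_id,
        "reviewer": reviewer,
        "action": action,
        "reason": reason,
    })


def record_intervention(log_path, **event):
    """Append the event to a JSON Lines file; existing lines are not rewritten."""
    line = intervention_entry(**event)
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(line + "\n")
    return line
```

For example, `record_intervention("oversight.jsonl", system="resume-screener", decision_id="D-1042", reviewer="hr.reviewer", action="overridden", reason="Employment gap misread as job-hopping")` appends one auditable line per decision.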

Step 5: Set Up Monitoring and Auditing Mechanisms

Once you've documented your policies and completed risk assessments, the next step is to ensure your AI systems remain secure and aligned with ethical standards throughout their lifecycle. Policies alone won't cut it - ongoing monitoring and regular audits are critical. Since AI systems adapt and learn from new data, they can develop issues like model drift or unexpected behaviors. That's why real-time monitoring is a must to keep performance and ethical compliance on track.
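Model drift is often watched by comparing the distribution of an input or score in production against a reference period. The sketch below uses the Population Stability Index (PSI), one widely used drift measure, on pre-binned proportions. The 0.1 and 0.25 bands are industry rules of thumb, not regulatory thresholds, and the function names are hypothetical.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two distributions given as proportions over the same bins.

    expected: bin shares from the training or reference period
    actual:   bin shares observed in production
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def drift_level(psi):
    """Rule-of-thumb bands; tune them per model."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    baseline = [0.25, 0.25, 0.25, 0.25]
    current = [0.10, 0.20, 0.30, 0.40]
    psi = population_stability_index(baseline, current)
    print(round(psi, 3), drift_level(psi))  # about 0.228, "moderate"
```

A "moderate" or "significant" result does not prove the model is wrong; it signals that the data has shifted enough to warrant re-evaluation.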

Set Up Monitoring Systems

Automated tools are a cornerstone of effective oversight. Platforms like Azure Policy and Microsoft Purview can enforce governance rules across AI deployments, flagging or blocking violations as they occur. These tools can identify problems such as biased data, inappropriate content generation, or privacy breaches without relying solely on manual reviews. For example, Azure AI Content Safety filters harmful content like hate speech or violent material in real time, while Microsoft Purview Communication Compliance scans user prompts and AI responses to flag risky interactions.

A robust monitoring setup should include both automated logging of metrics (like error rates and accuracy) and qualitative feedback from users and stakeholders. Using a "golden dataset" as a benchmark for testing is highly effective, and setting performance thresholds can help flag when retraining or manual reviews are needed. For high-stakes applications, such as healthcare or finance, quarterly risk assessments are recommended, while lower-risk systems might only need annual reviews. Clear incident response protocols are also essential, with defined escalation paths, shutdown procedures, and communication guidelines. These measures create a strong foundation for the independent evaluations discussed in the next step.
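The golden-dataset check described above can be as small as the sketch below: score the model on a fixed set of labeled examples and flag it for review when accuracy falls under a threshold. The 0.90 threshold, the toy model, and the function name are hypothetical; in practice you would track several metrics, not just accuracy.

```python
def evaluate_on_golden(model, golden, min_accuracy=0.90):
    """Score a model on a fixed golden set and flag it for review below threshold.

    golden: non-empty list of (input, expected_output) pairs that stays
    unchanged between runs, so scores remain comparable over time.
    """
    if not golden:
        raise ValueError("golden dataset is empty")
    correct = sum(model(x) == expected for x, expected in golden)
    accuracy = correct / len(golden)
    return {"accuracy": accuracy, "needs_review": accuracy < min_accuracy}


if __name__ == "__main__":
    golden = [(1, "odd"), (2, "even"), (3, "odd"), (4, "even")]

    def parity_model(x):
        return "even" if x % 2 == 0 else "odd"

    print(evaluate_on_golden(parity_model, golden))
```

Because the golden set never changes, a drop in its score points to a change in the model or pipeline rather than in the test data.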

Conduct Regular Audits

While automated monitoring is essential, independent audits provide an extra layer of accountability by offering an objective review of technical performance and ethical practices. These audits, conducted by external experts or uninvolved internal teams, can uncover blind spots that development teams might miss. Tools like Microsoft Purview Compliance Manager simplify this process by providing regulatory templates for frameworks like the EU AI Act, ISO/IEC 42001, and the NIST AI RMF, automating compliance assessments. Additionally, tools like PyRIT can test AI systems for vulnerabilities, while ModelDB tracks model versions and metadata to ensure full auditability [12, 17].

Audits should go beyond technical checks to evaluate ethical considerations as well. Maintain an up-to-date inventory of all active AI systems, complete with documentation and contact details for accountability. Include mechanisms for "appeal and override", allowing humans to review and adjust AI decisions when necessary. Conducting tabletop exercises to simulate incident responses can prepare your team for real-world challenges. Finally, publishing regular transparency reports that outline AI usage, incidents, and improvement efforts can help build trust with stakeholders and demonstrate compliance with regulations.
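An inventory is most useful when it can be checked automatically. This sketch assumes each entry records a risk tier, an accountable contact, and the last review date, and applies the cadence suggested earlier in this step (roughly quarterly for high-stakes systems, annual otherwise). Tier names, intervals in days, and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Quarterly for high-stakes systems, annual for lower-risk ones.
REVIEW_INTERVAL = {
    "high": timedelta(days=91),
    "limited": timedelta(days=365),
    "minimal": timedelta(days=365),
}


@dataclass
class InventoryEntry:
    system: str
    risk_tier: str       # "high", "limited", or "minimal"
    owner_contact: str   # accountable person or team
    last_review: date


def audit_findings(inventory, today):
    """List (system, issue) pairs for missing owners and overdue reviews."""
    findings = []
    for item in inventory:
        if not item.owner_contact:
            findings.append((item.system, "no accountable contact"))
        # Unknown tiers fall back to the strictest (quarterly) cadence.
        interval = REVIEW_INTERVAL.get(item.risk_tier, REVIEW_INTERVAL["high"])
        if today - item.last_review > interval:
            findings.append((item.system, "review overdue"))
    return findings


if __name__ == "__main__":
    inventory = [
        InventoryEntry("credit-scoring", "high", "risk@example.com", date(2024, 1, 10)),
        InventoryEntry("spam-filter", "minimal", "", date(2024, 3, 1)),
    ]
    print(audit_findings(inventory, today=date(2024, 6, 1)))
```

The same findings list can feed the transparency reports mentioned above, since it shows which systems fell out of compliance and when.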

If you're looking for professional guidance to enhance your monitoring and auditing systems, check out the Top Consulting Firms Directory for expert advice.

Step 6: Provide Training and Encourage Collaboration

Policies only work when your team understands and applies them effectively. That’s why mandatory, ongoing training is a must. Training should cover both the "what" and the "why" behind your responsible AI requirements. Annual refreshers help employees stay updated with new technologies and regulations. Instead of generic sessions, tailor training to specific roles: executives might need a high-level understanding of risk management, while technical teams benefit from hands-on training with bias testing and "ethical by design" tools.

Create Ethics Training Programs

Training brings your governance frameworks to life by showing employees how to apply them in practice. Scenario-based learning can help employees navigate real-world challenges, such as biased hiring algorithms or chatbots producing inappropriate content. These exercises teach them how to identify risks and apply mitigation strategies before issues escalate. Another effective method is creating a network of "AI champions" - team members trained to offer peer-to-peer guidance on ethical concerns specific to their roles. Additionally, an internal "case law" repository can document past decisions, trade-offs, and risk thresholds, offering teams a practical guide for handling similar dilemmas in the future.

Training should also foster collaboration between departments. Legal, policy, UX, and engineering teams need a shared understanding of concepts like "fairness" and "transparency" to ensure clear communication. Establish clear channels for reporting ethical concerns so employees and end-users know how to flag potential issues. To reinforce ethical behavior, tie it to performance reviews and promotion criteria. Once internal teams are equipped, it’s time to expand your efforts by engaging external collaborators.

Engage Stakeholders

Collaboration with external experts strengthens your ethical AI initiatives. Partnering with industry specialists, advisory councils, and professional networks keeps you informed about evolving regulations and standards such as the EU AI Act and ISO/IEC 42001. These external perspectives can help identify gaps or blind spots that internal teams might overlook. Establish a cross-functional governance team or an AI Center of Excellence with representatives from legal, security, product, HR, and engineering to ensure diverse viewpoints are considered. A hub-and-spoke model works well here: centralized standards maintain consistency, while individual business units adapt policies to their specific needs.

"Most 'AI principles' fail at the last mile. This playbook shows how to wire principles into org reality - budgets, launch gates, metrics, incentives, and cross-team rituals." - Jakub Szarmach, Author, CDT Playbook

Regular training aligns with oversight protocols, ensuring ethical practices remain effective and relevant. Transparency reports and audits also play a key role in building trust. Sharing details about AI usage, incidents, and improvement efforts demonstrates accountability. For expert guidance on training programs or finding external partners, check out the Top Consulting Firms Directory.

Conclusion and Next Steps

Ethical AI governance isn’t a one-time task - it’s an ongoing journey that demands consistent effort. The encouraging part? You don’t have to wait for flawless regulations or a fully prepared organization to begin. Start small by launching a pilot program targeting a few high-risk AI applications. Use the leadership and oversight structure from Step 1 as your foundation, and expand as you discover what works best.

"The time to start is now: our research indicates that reaching high levels of RAI maturity takes two to three years. Waiting for AI regulations to mature is not an option." - Steven Mills, Ashley Casovan, and Var Shankar

A logical next step is performing a "shadow AI" inventory. This builds on the risk assessment process from Step 3 and involves identifying all AI systems currently in use within your organization. This baseline not only maps out your current AI landscape but also highlights areas of potential risk. Following this, establish a cross-functional AI Governance Committee. Including representatives from legal, IT, HR, and compliance ensures your policies are shaped by a variety of perspectives.

Adopt and adapt frameworks like the NIST AI Risk Management Framework or OECD Principles to align with your organization’s specific risk profile. These frameworks should remain dynamic, evolving through regular reviews to incorporate emerging regulations and standards, such as the EU AI Act and ISO/IEC 42001.

If you’re looking to accelerate progress, consider consulting with industry specialists. The Top Consulting Firms Directory is a great resource for finding expert guidance tailored to your needs. External consultants can assist with independent audits, navigating complex regulatory requirements, and speeding up your journey toward responsible AI maturity.

FAQs

What roles are essential for effective AI governance?

Creating a solid framework for AI governance starts with assembling a team that has well-defined roles and responsibilities. This structure ensures that AI systems are used ethically and comply with regulations.

Key players include an internal review committee or oversight body. Their job? To assess AI projects, manage potential risks, and maintain ethical standards. At the leadership level, roles like AI strategy leaders or executive sponsors are essential. These individuals integrate governance into decision-making processes and ensure accountability at every step.

On the operational side, professionals such as data scientists, AI developers, and compliance officers are crucial. They focus on implementing and monitoring AI systems, ensuring these systems align with established guidelines and policies.

By bringing together these diverse roles, organizations can promote transparency, fairness, and regulatory compliance throughout the AI lifecycle. This approach not only helps manage risks effectively but also supports responsible progress in the field of AI.

How can businesses minimize bias and protect privacy in their AI systems?

To reduce bias and protect privacy in AI systems, companies should adopt ethical AI governance policies that emphasize transparency, accountability, and proactive risk management. A good starting point is assessing the quality of training data to uncover and address any biases. It's also important to regularly monitor AI models to ensure they stay fair and comply with privacy laws.

Involving cross-functional teams to manage AI projects can help tackle ethical challenges and promote responsible practices. Leadership plays a key role here - executives need to actively support ethical AI efforts and ensure that policies keep pace with changing regulations and standards. By integrating these steps into the AI development process, businesses can create systems that are fair, secure, and deserving of trust.

What are the key frameworks for creating ethical AI governance policies?

Several frameworks offer practical guidance for creating ethical AI governance policies. One standout example is the ETHOS Bridge Framework, a research-driven system designed to help organizations align with major regulations and frameworks, such as the EU AI Act and the NIST AI RMF. It focuses on evidence-based practices, enabling businesses to manage AI-related risks more effectively.

Another valuable resource is the AI Governance Playbook from the Council on AI Governance. This playbook dives into key areas like AI strategy, risk management, and workforce training, offering actionable advice for ensuring responsible AI implementation. Additionally, frameworks like the NIST AI RMF and Capgemini’s specialized guides emphasize creating policies that reflect organizational values while fostering transparency and accountability.

Together, these frameworks provide businesses with essential tools to incorporate ethical principles into their AI systems and stay compliant with shifting regulations.